    # train an SVM classifier
    X_train, X_test, Y_train, Y_test = train_test_split(*shap. … iris(), test_size=0.2, random_state=0)

    # use Kernel SHAP to explain test set predictions
    explainer = shap. …

    # plot the SHAP values for the Setosa output of the first instance
    shap. …

The above explanation shows four features, each contributing to push the model output from the base value (the average model output over the training dataset we passed) towards zero. If there were any features pushing the class label higher, they would be shown in red.
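The relationship between the base value and the per-feature contributions can be checked by hand on a tiny problem. The following is a minimal sketch, not the SHAP library's implementation: `model`, `coalition_value`, `exact_shap_values`, and the toy data are all hypothetical names and values chosen for illustration. It enumerates every feature coalition to compute exact Shapley values and shows that the base value plus the sum of the contributions recovers the model output for the explained instance.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical toy model with one interaction term (an assumption for this sketch).
    return 2.0 * x[0] - 1.0 * x[1] + 0.5 * x[0] * x[2]


def coalition_value(x, background, subset):
    # Average model output when the features in `subset` are taken from x
    # and all other features are taken from each background row in turn.
    total = 0.0
    for row in background:
        mixed = [x[i] if i in subset else row[i] for i in range(len(x))]
        total += model(mixed)
    return total / len(background)


def exact_shap_values(x, background):
    # Classic Shapley formula: weighted average of marginal contributions
    # of feature i over all coalitions of the remaining features.
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                gain = coalition_value(x, background, s | {i}) - coalition_value(x, background, s)
                phi[i] += weight * gain
    return phi


# Toy background data and one instance to explain (made-up numbers).
background = [[0.0, 1.0, 2.0], [1.0, 0.0, 1.0], [2.0, 2.0, 0.0], [1.0, 1.0, 1.0]]
x = [3.0, 2.0, 4.0]

base_value = coalition_value(x, background, set())  # average output over the background
phi = exact_shap_values(x, background)

print("base value:", base_value)
print("SHAP values:", phi)
print("base value + sum of SHAP values:", base_value + sum(phi))
print("model output:", model(x))
```

Exhaustive enumeration grows exponentially with the number of features, which is why approximations such as Kernel SHAP are used in practice.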

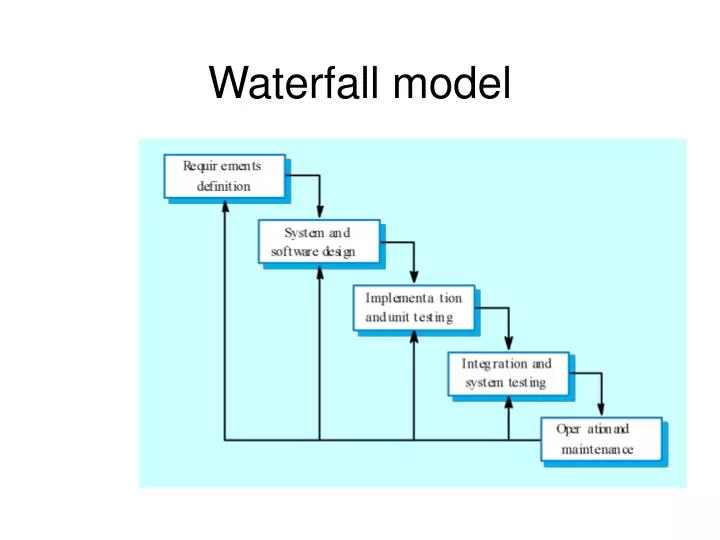

#Waterfall approach code#

By using ranked_outputs=2 we explain only the two most likely classes for each input (this spares us from explaining all 1,000 classes).

Model agnostic example with KernelExplainer (explains any function)

Kernel SHAP uses a specially-weighted local linear regression to estimate SHAP values for any model. Below is a simple example for explaining a multi-class SVM on the classic iris dataset.

    … model_selection import train_test_split

    # print the JS visualization code to the notebook
    shap. …
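The weighted local linear regression behind Kernel SHAP can be sketched on a small problem. The code below is an illustrative sketch under stated assumptions, not the library's `KernelExplainer`: the function names (`model`, `kernel_shap`) and the toy data are hypothetical, and every coalition is enumerated instead of sampled, which is only feasible for a handful of features. Each coalition is weighted with the Shapley kernel, and the constraint that the contributions sum to the model output minus the base value is enforced by eliminating the last coefficient.

```python
from itertools import combinations
from math import comb

import numpy as np


def model(X):
    # Hypothetical toy model (an assumption for this sketch); accepts one row or many rows.
    X = np.atleast_2d(X)
    return 2.0 * X[:, 0] - X[:, 1] + 0.5 * X[:, 0] * X[:, 2]


def kernel_shap(f, x, background):
    n = x.shape[0]
    base = f(background).mean()   # expected model output over the background
    fx = f(x)[0]                  # model output for the instance being explained
    masks, weights, values = [], [], []
    for size in range(1, n):      # all non-empty, non-full coalitions
        w = (n - 1) / (comb(n, size) * size * (n - size))  # Shapley kernel weight
        for subset in combinations(range(n), size):
            z = np.zeros(n)
            z[list(subset)] = 1.0
            # Present features come from x, absent ones from each background row.
            mixed = np.where(z == 1.0, x, background)
            masks.append(z)
            weights.append(w)
            values.append(f(mixed).mean())
    Z = np.array(masks)
    w = np.array(weights)
    y = np.array(values) - base
    total = fx - base
    # Enforce sum(phi) == total by substituting phi[-1] = total - sum(phi[:-1]).
    A = Z[:, :-1] - Z[:, [-1]]
    b = y - Z[:, -1] * total
    sw = np.sqrt(w)
    head, *_ = np.linalg.lstsq(A * sw[:, None], b * sw, rcond=None)
    phi = np.append(head, total - head.sum())
    return base, phi


# Toy background data and one instance to explain (made-up numbers).
background = np.array([[0.0, 1.0, 2.0], [1.0, 0.0, 1.0], [2.0, 2.0, 0.0], [1.0, 1.0, 1.0]])
x = np.array([3.0, 2.0, 4.0])

base, phi = kernel_shap(model, x, background)
print("base value:", base)
print("Kernel SHAP values:", phi)
print("base + sum:", base + phi.sum(), "model output:", model(x)[0])
```

With full enumeration the regression recovers the exact Shapley values; real implementations sample coalitions instead, so their estimates carry sampling noise.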

    # explain predictions of the model on four images
    e = shap. …
    # ...or pass tensors directly
    # e = shap.DeepExplainer((…, …), background)
    shap_values = e. …

The plot above explains ten outputs (digits 0-9) for four different images. Red pixels increase the model's output while blue pixels decrease the output. The input images are shown on the left, and as nearly transparent grayscale backings behind each of the explanations. The sum of the SHAP values equals the difference between the expected model output (averaged over the background dataset) and the current model output. Note that for the 'zero' image the blank middle is important, while for the 'four' image the lack of a connection on top makes it a four instead of a nine.

Deep learning example with GradientExplainer (TensorFlow/Keras/PyTorch models)

Expected gradients combines ideas from Integrated Gradients, SHAP, and SmoothGrad into a single expected value equation. This allows an entire dataset to be used as the background distribution (as opposed to a single reference value) and allows local smoothing. If we approximate the model with a linear function between each background data sample and the current input to be explained, and we assume the input features are independent, then expected gradients will compute approximate SHAP values. In the example below we have explained how the 7th intermediate layer of the VGG16 ImageNet model impacts the output probabilities.

    from keras. … vgg16 import preprocess_input
    import keras.backend as K
    import numpy as np
    import json
    import shap

    # load pre-trained model and choose two images to explain
    model = VGG16(weights='imagenet', include_top=True)

    # load the ImageNet class names
    url = ""
    fname = shap. …

    # explain how the input to the 7th layer of the model explains the top two classes
    def map2layer(x, layer):
        feed_dict = dict(zip( …

    … local_smoothing=0  # std dev of smoothing noise
    … shap_values(map2layer(to_explain, 7), ranked_outputs=2)

    # get the names for the classes
    index_names = np.vectorize(lambda x: class_names)(indexes)

    … image_plot(shap_values, to_explain, index_names)

Predictions for two input images are explained in the plot above. Red pixels represent positive SHAP values that increase the probability of the class, while blue pixels represent negative SHAP values that reduce the probability of the class.
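The expected gradients idea, and the property that the attributions sum to the difference between the current model output and the expected output over the background, can be illustrated without a neural network. The sketch below is an assumption-laden toy, not `GradientExplainer`: `model`, `gradient`, and `expected_gradients` are hypothetical names, the model is a small quadratic with a hand-written gradient, and a fixed midpoint grid over the interpolation coefficient replaces the random sampling a real implementation would use, so that the result is deterministic.

```python
import numpy as np


def model(x):
    # Hypothetical smooth toy model (quadratic), standing in for a network.
    return x[0] ** 2 + 3.0 * x[1] + x[0] * x[2]


def gradient(x):
    # Analytic gradient of the toy model above.
    return np.array([2.0 * x[0] + x[2], 3.0, x[0]])


def expected_gradients(x, background, steps=50):
    # Average (x - ref) * gradient over background references and over points
    # on the straight line from each reference to x.
    alphas = (np.arange(steps) + 0.5) / steps  # midpoint grid on (0, 1), an assumption
    phi = np.zeros_like(x)
    for ref in background:
        for a in alphas:
            point = ref + a * (x - ref)
            phi += (x - ref) * gradient(point)
    return phi / (len(background) * steps)


# Toy background data and one input to explain (made-up numbers).
background = np.array([[0.0, 1.0, 2.0], [1.0, 0.0, 1.0], [2.0, 2.0, 0.0]])
x = np.array([3.0, 2.0, 4.0])

phi = expected_gradients(x, background)
expected_output = np.mean([model(ref) for ref in background])

print("attributions:", phi)
print("sum of attributions:", phi.sum())
print("f(x) - E[f(background)]:", model(x) - expected_output)
```

Using the whole background set as references, instead of a single baseline, is what distinguishes this from plain Integrated Gradients.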

    import transformers
    import shap

    # load a transformers pipeline model
    model = transformers.pipeline('sentiment-analysis', return_all_scores=True)

    # explain the model on two sample inputs
    explainer = shap. …

    # visualize the first prediction's explanation for the POSITIVE output class
    shap. … text(shap_values)

Deep learning example with DeepExplainer (TensorFlow/Keras models)

Deep SHAP is a high-speed approximation algorithm for SHAP values in deep learning models that builds on a connection with DeepLIFT described in the SHAP NIPS paper. The implementation here differs from the original DeepLIFT by using a distribution of background samples instead of a single reference value, and by using Shapley equations to linearize components such as max, softmax, products, divisions, etc. Note that some of these enhancements have since also been integrated into DeepLIFT. TensorFlow models and Keras models using the TensorFlow backend are supported (there is also preliminary support for PyTorch):

    # include code from …
    import shap
    import numpy as np

    # select a set of background examples to take an expectation over
    background = x_train … , 100, replace=False)]
